Information about the book

AUTHOR: Nick Riemer

TITLE: Introducing Semantics

PUBLISHER: Cambridge University Press

YEAR: 2010

PAGES: 460

REVIEWER: Mehdi ZOUAOUI, Instructor

Summary

Introducing Semantics is a book that any serious aspiring researcher in linguistics should study to gain a solid background in the dynamics of this science. This academic work is the product of Dr. Nick Riemer, a senior lecturer at the University of Sydney, who has a major interest in the history of language, linguistics and semantics. Riemer divides the book into 11 chapters, each explaining aspects of semantics that are summarized below.

1- Meaning in the empirical study of language

The author begins the book by giving an overview of the definition and scope of semantics and lays down the foundational questions that semanticists try to answer:

With these questions in hand, semantics divides into several sub-fields, the most important of which are:

Lexical semantics: which is the study of word meaning.

Phrasal semantics: which is the study of the principles which govern the construction of the meaning of phrases and of sentence meaning out of compositional combinations of individual lexemes.

It is important not to confuse semantics with pragmatics. The former studies the basic literal meanings of words considered as parts of a language system. The latter concentrates on how these basic meanings are assigned referents in different contexts, and on the differing (ironic, metaphorical, etc.) uses to which language is put.

Meaning is thus the fulcrum of this book, and whenever we talk about the notion of meaning in English, we approach it in three ways:

Meaning: describing meaning by emphasizing the speaker’s intentions

Use: description in terms of use limits itself to a consideration of equivalences between the piece of language in question and an assumed norm.

Truth: describing meaning by emphasizing the objective facts of the situation, concentrating on the relation between language and reality.

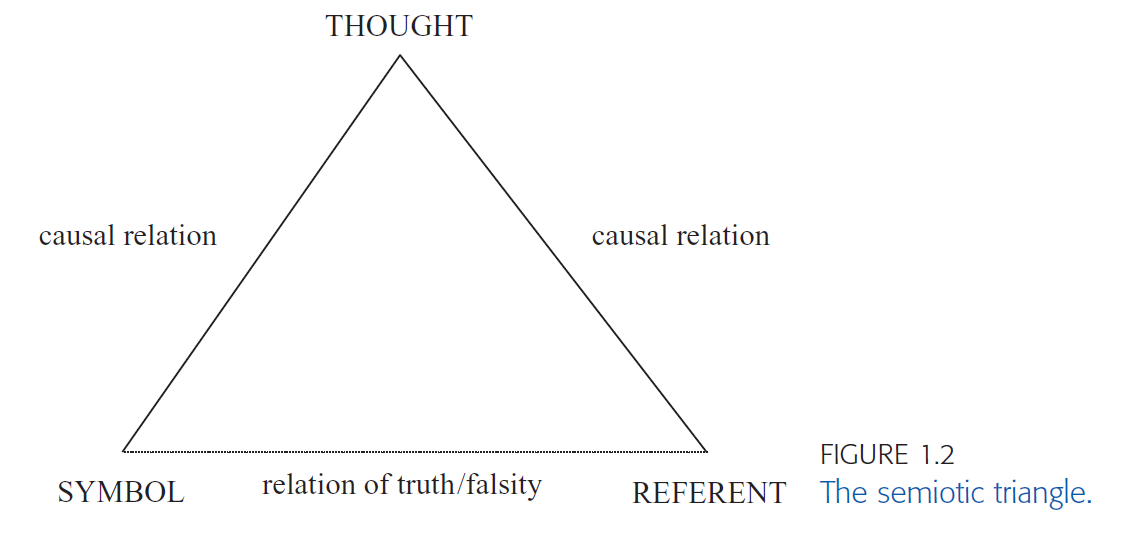

In order to foreground the dynamics between language, mind, world and meaning, the author introduces the famous semiotic triangle (see figure 2 in the appendix), often called the triangle of reference or meaning, first published in Ogden and Richards’s book The Meaning of Meaning (1923). The semiotic triangle comprises the following elements:

Thoughts: which reflects the fact that language comes from human beings and is therefore a product of the brain.

Symbol: whatever perceptible token we choose to express the speaker’s intended meaning.

Referents: whatever things, events, or situations in the world the language is about.

The author then provides an account of the problem of circular definition and suggests some alternatives for getting around it. The first is to treat the referent, or denotation, as the major component of meaning. However, one problem for referential theories is that some words, such as abstract and grammatical words, have no obvious referent in the real world. Another suggestion is to look at meaning as a fixed conceptual or mental representation, instantiated in our minds in some stable, finite medium, of which our thought consists. A further suggestion is to look at meanings as brain states, which involve intentionality. An alternative to the three previous approaches is the view that a word’s meaning consists in the way we use it. This view has been adopted by behaviorist psychologists such as Skinner (1957) and linguists such as Bloomfield (1933). Despite its appeal, the principal objection to use theories of meaning is the mind-boggling variety of situations in which we may use linguistic forms.

2- Meaning and definition

In the second chapter, the author posits that an understanding of definition is a lynchpin for any attempt to develop a conceptual theory of word meaning. The definitions found in dictionaries result from a word-based, or semasiological, approach to meaning. This approach starts with a language’s individual lexemes and tries to specify the meaning of each one. The other approach, the onomasiological one, follows the opposite logic: it starts with a particular meaning and lists the various forms available in the language for its expression.

In this regard, Riemer discusses the units of meaning and the minimal meaning-bearing units of language. The problem of wordhood can be approached at two levels:

Phonological rules and processes: where stress is a good indicator of the phonological word.

Grammatical level: cohesiveness, fixed order and conventionalized coherence and meaning. However, the level of grammatical structure a meaning should be attributed to may be problematic, and boundary cases, where meanings seem to straddle several grammatical units, occur frequently.

The author then moves to the ways of defining meaning, which are:

Real and nominal definition: according to Aristotle, a definition (horismos) has two quite different interpretations: ‘in defining,’ says Aristotle, ‘one exhibits either what the object is or what its name means’. We can therefore consider a definition either as a summation of the essence or inherent nature of a thing (real definition; Latin res ‘thing’), or as a description of the meaning of the word which denotes this thing (nominal definition; Latin nomen ‘name, noun’).

Definition by ostension: which defines words by pointing out the objects which they denote.

Definition by context: which is to situate the word in a system of wider relations through which the specificity of the definiendum can be seen.

Definition by genus and differentia: which is the theory that Aristotle developed in the Posterior Analytics. According to Aristotle, definition involves specifying the broader class to which the definiendum belongs (often called the definiendum’s genus), and then stating the feature (the differentia) which distinguishes the definiendum from the other members of this broader class.

It is important here to mention that a minimum requirement on a term’s definition to check its accuracy is that the substitution of the definiens for the definiendum should be truth preserving in all contexts. However, one of the most frequent criticisms of definitional theories of semantics is that no satisfying definition of a word has ever been formulated.

3- The scope of meaning I: external context

In the third chapter, the author talks about the relation of meaning to external context, which is an essential element of any adequate description of meaning. One of the most basic types of context is the extralinguistic context of reference, which concerns the entities an expression is about. It was the German logician and philosopher of mathematics Gottlob Frege (1848–1925) who first saw the significance of the distinction between an expression’s referent and its sense. Frege conceptualized three aspects of a word’s total semantic effect:

There are certain types of expressions, called deictic or indexical expressions (or deictics or indexicals), which we can define as those which refer to some aspect of the context of utterance as an essential part of their meaning. Among them we can mention:

Person deixis: by which speaker (I), hearer (you) and other entities relevant to the discourse (he / she / it / they) are referred to.

Temporal deixis: (now, then, tomorrow)

Discourse deixis: which refers to other elements of the discourse in which the deictic expression occurs (A: You stole the cash. B: That’s a lie).

4- The scope of meaning II: interpersonal context

In the fourth chapter, the author introduces the interpersonal context in the scope of meaning, starting with illocutionary force and speech acts. Givón (1984: 248), in this context, noted that the fundamental role of assertion in language can be explained by four large-scale features of human social organization:

• Communicative topics are often outside the immediate, perceptually available range.

• Much pertinent information is not held in common by the participants in the communicative exchange.

• The rapidity of change in the human environment necessitates periodic updating of the body of shared background knowledge.

• The participants are often strangers.

In the same realm and in his attempt to identify acts present in utterances, Austin divided and defined acts as follows:

• Locutionary act: the act of saying something.

• Illocutionary act: the act performed in saying something.

• Perlocutionary act: the act performed by saying something.

The author then moves to speaker’s intention and hearer’s inference, noting that the idea that conventions underlie the illocutionary force of utterances has been much criticized. The major problem with such a theory is that it proves difficult to state what the convention behind any speech act might be. In addition, linguistic communication is an intentional-inferential process, in which hearers try to infer speakers’ intentions on the basis of the ‘clues’ provided by language.

It is necessary to separate those aspects of an utterance’s effect that arise from its use in a particular context from those created by the meanings of its constituent elements. For this purpose, we have the notion of conversational implicature proposed by Grice.

The implicatures of an utterance are what it is necessary to believe the speaker is thinking, and intending the hearer to think, to account for what they are saying. Conventional implicatures are what we might otherwise refer to as the standard meanings of linguistic expressions, whereas conversational implicatures are those that arise in particular contexts of use, without forming part of the word’s characteristic or conventional force. Grice formulated the famous Gricean maxims, which are:

The maxim of quality.

The maxim of quantity.

The maxim of relevance.

The maxim of manner.

However, the Gricean maxims must not be seen as universal principles governing the entire range of human conversational behavior, since conversational maxims seem to vary situationally and cross-culturally, and the set of maxims operative in any culture is a matter for empirical investigation.

At the end of the chapter, the author discusses relevance, an important notion in the Gricean enterprise that was developed further by Sperber and Wilson. For them, language use is an ostensive-inferential process in which the speaker ostensively provides the hearer with evidence of their meaning in the form of words and, combined with the context, this linguistic evidence enables the hearer to infer the speaker’s meaning. Every utterance, for Sperber and Wilson, communicates a presumption of its own optimal relevance.

5- Analyzing and distinguishing meanings

In the fifth chapter, the author moves to the analysis of meanings and the semantic relations that hold between them, on the view that knowing an expression’s meaning does not simply involve knowing its definition or inherent semantic content.

Relationships like synonymy, antonymy, meronymy, and so on all concern the paradigmatic relations of an expression, or the relations which determine the choice of one lexical item over another. These lexical relations include:

Meronymy (Greek meros: ‘part’) is the relation of part to whole: hand is a meronym of arm, seed is a meronym of fruit, blade is a meronym of knife.

Hyponymy (Greek hypo- ‘under’) is the lexical relation described in English by the phrase kind / type / sort of.

Taxonomy shows five ranks, each of which includes all those below it and every rank in the hierarchy is thus one particular kind of the rank above it.

Synonymy is, for some investigators, a context-bound phenomenon, where two words are synonyms in a certain given context, whereas for others it is context-free: if two words are synonymous, they are identical in meaning in all contexts.
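As a rough illustration of the ‘kind of’ relation described above, hyponymy can be sketched as a chain of links that is followed upwards through a taxonomy. The words and links below are toy examples chosen for this sketch, not data from the book, and the sketch assumes that hyponymy is transitive, which is the usual idealization.

```python
# Toy hyponymy links (illustrative assumption, not taken from the book):
# each word maps to its immediate superordinate term.
HYPERNYM = {
    "spaniel": "dog",
    "dog": "mammal",
    "mammal": "animal",
}


def is_hyponym_of(word, candidate):
    """Return True if `word` is a (possibly indirect) kind of `candidate`."""
    current = HYPERNYM.get(word)
    while current is not None:
        if current == candidate:
            return True
        # Climb one rank up the taxonomy.
        current = HYPERNYM.get(current)
    return False


print(is_hyponym_of("spaniel", "animal"))
print(is_hyponym_of("animal", "dog"))
```

The first call climbs three ranks and succeeds; the second fails because the relation only runs from the more specific term to the more general one.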

The fact that semantic relations reveal aspects of meaning is one motivation for a componential approach to semantic analysis. The information in a componential analysis is similar to the information contained in a definition. In principle, anything that can form part of a definition can also be rephrased in terms of semantic components. Its embodiment in binary features (i.e. features with only two values, + or −) represents a translation into semantics of the principles of structuralist phonological analysis, which used binary phonological features like [± voiced], [± labial], [± nasal], etc.

The author then moves to the term polysemy, which is reserved for words like ‘pièce’ that show a collection of semantically related senses. We can, therefore, define polysemy as the possession by a single phonological form of several conceptually related meanings. A word is, in that sense, monosemous if it has only a single meaning. This can be tested by means of:
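A minimal sketch of componential analysis with binary features might look as follows. The four words and the features [±human], [±male] and [±adult] are a standard textbook-style illustration assumed here for the sake of the example; the names `FEATURES`, `notation` and `contrast` are hypothetical.

```python
# Binary semantic features for four words (illustrative assumption).
# True stands for "+", False for "-".
FEATURES = {
    "man":   {"human": True, "male": True,  "adult": True},
    "woman": {"human": True, "male": False, "adult": True},
    "boy":   {"human": True, "male": True,  "adult": False},
    "girl":  {"human": True, "male": False, "adult": False},
}


def notation(word):
    """Render a word's components in the usual [+feature] / [-feature] style."""
    return " ".join(
        f"[{'+' if value else '-'}{name}]" for name, value in FEATURES[word].items()
    )


def contrast(word_a, word_b):
    """Return the features on which two words take opposite values."""
    a, b = FEATURES[word_a], FEATURES[word_b]
    return sorted(name for name in a if a[name] != b[name])


print(notation("boy"))           # [+human] [+male] [-adult]
print(contrast("man", "woman"))  # ['male']
print(contrast("man", "girl"))   # ['adult', 'male']
```

On this representation, a semantic relation such as the opposition between man and woman shows up as a difference in a single feature value.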

The definitional test: that identifies the number of senses of a word with the number of separate definitions needed to convey its meaning accurately.

Logical test: A word (or phrase) is polysemous on this test if it can be simultaneously true and false of the same referent.

One possible alternative to the view of words having a determinate and finite number of senses would be to think of a word’s meaning as a continuum of increasingly fine distinctions open to access at different levels of abstraction.

6- Logic as a representation of meaning

In the sixth chapter, the author turns to logic, which is the study of the nature of valid inferences and reasoning. Logic is important to linguistics for at least three reasons:

The study of logic is one of the oldest comprehensive treatments of questions of meaning.

Logical concepts inform a wide range of modern formal theories of semantics and are also crucial in research in computational theories of language and meaning.

Logical concepts provide an enlightening point of contrast with natural language.

One of the crucial elements in logic is the notion of proposition, which is something that serves as the premise or conclusion of an argument. In that regard, there are different logical operators such as conjunction, disjunction and material conditional. Despite its instrumentality, many linguists maintain that discontinuities between natural language and logic are to be explained by the fact that natural languages possess a pragmatic dimension, which prevents the logical operators from finding exact equivalents in ordinary discourse.
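The three operators mentioned above can be made concrete with their standard two-valued truth tables. The sketch below is only an illustration of classical propositional logic; the dictionary `OPERATORS` and the function `truth_table` are names invented for this example.

```python
from itertools import product

# Standard two-valued definitions of three logical operators.
OPERATORS = {
    "p and q": lambda p, q: p and q,       # conjunction
    "p or q": lambda p, q: p or q,         # (inclusive) disjunction
    "p -> q": lambda p, q: (not p) or q,   # material conditional
}


def truth_table(op):
    """List (p, q, result) for every combination of truth values."""
    return [(p, q, op(p, q)) for p, q in product([True, False], repeat=2)]


for name, op in OPERATORS.items():
    print(name)
    for p, q, value in truth_table(op):
        print(f"  p={p!s:5} q={q!s:5} -> {value}")
```

The table for the material conditional shows one well-known discontinuity with ordinary usage: it is true whenever p is false, which does not match how ‘if ... then’ is typically understood in conversation.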

Reference and truth are considered as the semantic facts when it comes to logical approaches to semantics. Chierchia and McConnell-Ginet (2000: 72) said that, ‘if we ignore the conditions under which S [a sentence] is true, we cannot claim to know the meaning of S. Thus, knowing the truth conditions for S is at least necessary for knowing the meaning of S.’

Riemer also discusses the relations between propositions, which we can summarize as follows:

Entailment: a proposition p entails another proposition q when the following conditions hold:

• whenever p is true, q must be true.

• A situation describable by p must also be a situation describable by q.

• p and not q is contradictory (can’t be true in any situation)

Presupposition: a proposition p presupposes another proposition q if both p and the negation of p entail q.

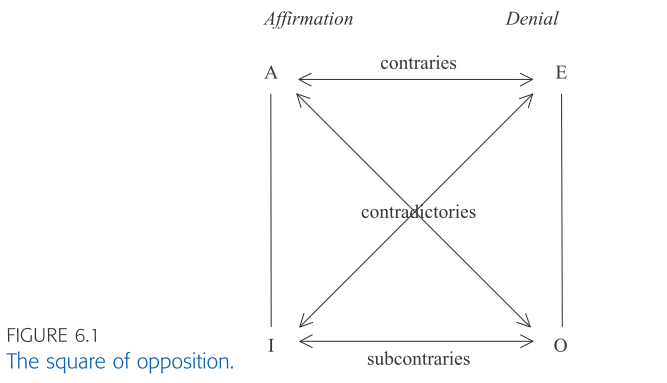

Contradictories, contraries, and subcontraries: contradictories are a pair of propositions with opposite truth values.

The author ends the chapter with the remark that although logic is useful for the study of language, there seem to be areas of clear incompatibility between logical constructs and the natural language terms which partly translate them.
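Two of the relations above can be checked mechanically if situations are modelled, as a simplifying assumption, as assignments of truth values to atomic propositions. In this sketch p entails q when no assignment makes p true and q false, and two propositions are contradictories when they differ in truth value under every assignment. The function names are hypothetical.

```python
from itertools import product


def assignments(n_vars):
    """Every combination of truth values for n atomic propositions."""
    return product([True, False], repeat=n_vars)


def entails(p, q, n_vars):
    """p entails q: whenever p is true, q is true as well."""
    return all(q(*vals) for vals in assignments(n_vars) if p(*vals))


def contradictories(p, q, n_vars):
    """p and q always have opposite truth values."""
    return all(p(*vals) != q(*vals) for vals in assignments(n_vars))


# Two example propositions built from atoms a and b.
both = lambda a, b: a and b
first = lambda a, b: a
not_first = lambda a, b: not a

print(entails(both, first, 2))               # True
print(entails(first, both, 2))               # False
print(contradictories(first, not_first, 2))  # True
```

This covers only propositional structure; lexical entailments that depend on word meaning would need a richer model than a truth table.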

7- Meaning and cognition I: categorization and cognitive semantics

In the seventh chapter, the author considers meaning from a cognitive perspective, starting with the idea that words in natural language can be seen as categories. Categorization is an important topic in semantics because we can see language as a means of categorizing experience.

As seen in chapter 6, standard logical approaches to language are two-valued approaches. This means that they only recognize two truth values, true and false. A related classical idea is that definitions are lists of necessary and sufficient conditions for particular meanings. Categories defined in this way, known as Aristotelian categories, have the following two important characteristics:

The conditions on their membership can be made explicit by specifying lists of necessary and sufficient conditions.

Their membership is determinate: whether or not something is a member of the category can be checked by seeing whether it fulfills the conditions.

In contrast, the author notes that the idea that category membership is graded is at the heart of the prototype theory of categorization, which is most associated with the psychologist Eleanor Rosch and her colleagues. However, there are some problems when it comes to describing prototype categories in terms of attributes:

Attributes can often only be identified after the category has been identified.

Attributes are context-dependent.

There are many alternative descriptions of the attributes of a category.
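The two characteristics just listed can be sketched as a simple membership check: a category is a set of conditions, and an entity belongs to it only if it meets all of them. The ‘bachelor’ conditions below are a common illustrative example assumed for this sketch rather than an analysis taken from the book.

```python
# A classical category as a list of necessary and sufficient conditions
# (illustrative assumption: the familiar "bachelor" example).
BACHELOR = {"human", "male", "adult", "unmarried"}


def is_member(entity_attributes, conditions):
    """Membership is all-or-nothing: every condition must be fulfilled."""
    return conditions <= set(entity_attributes)


print(is_member({"human", "male", "adult", "unmarried", "tall"}, BACHELOR))  # True
print(is_member({"human", "male", "adult"}, BACHELOR))                       # False
```

The check returns only True or False, which is exactly the determinate, two-valued picture of membership described above.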

Riemer then moves to the various approaches that cognitive semantics covers, which are characterized by a holistic vision of the place of language within cognition. For many investigators, this involves the following commitments:

• A rejection of a modular approach to language: which assumes that language is one of several independent modules or faculties within cognition.

• An identification of meaning with conceptual structure.

• A rejection of the syntax–semantics distinction.

• A rejection of the semantics–pragmatics distinction.

Despite the practicality of cognitive semantics, there are some problems with it:

• The ambiguity of diagrammatic representations.

• The problem of determining the core meaning.

• The indeterminate and speculative nature of the analyses.

8- Meaning and cognition II: formalizing and simulating conceptual representations

In the eighth chapter, the author looks at some attempts to formalize the conceptual representations of language. According to Jackendoff, conceptual semantics aims to situate semantics ‘in an overall psychological framework, integrating it not only with linguistic theory but also with theories of perception, cognition and conscious experience’. Jackendoff claims that a decompositional method is necessary to explore conceptualization.

The author confirms that computer technology has taken on great importance in linguistics generally since the 1970s, and semantics is no exception. Computer architectures certainly are the closest simulations available for the complexity of the brain, but they still vastly underperform humans in linguistic ability.

One of the topics related to the lexicon is word-sense disambiguation. We can identify two main approaches to word-sense disambiguation in computational linguistics:

The selectional restriction approach generates complete semantic representations for all the words in a sentence and then eliminates those which violate selectional restrictions coded in the component words.

The other approach uses the immediate context of the target word as a clue in identifying the intended sense.

It is important to mention Pustejovsky’s semantic approach, known as the generative lexicon approach, which claims to solve many of these problems by adopting a different picture of lexical information. Pustejovsky criticizes the standard view of the lexicon, on which we associate each lexeme with a fixed number of senses.
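The second, context-based approach can be illustrated with a very small overlap heuristic, loosely in the spirit of the simplified Lesk method; this is a swapped-in illustration, not a procedure described in the book. Each sense is given a hand-made set of cue words (a hypothetical toy inventory), and the sense whose cues overlap most with the surrounding words is chosen.

```python
# Hypothetical toy sense inventory: each sense lists context words
# that tend to accompany it. Not real lexical data.
SENSES = {
    "bank": {
        "financial institution": {"money", "deposit", "loan", "account"},
        "river edge": {"river", "water", "shore", "fishing"},
    }
}


def disambiguate(word, sentence):
    """Pick the sense whose cue words overlap most with the context."""
    context = set(sentence.lower().split()) - {word}
    scores = {
        sense: len(cues & context) for sense, cues in SENSES[word].items()
    }
    # On a tie, max() keeps the first sense listed in the inventory.
    return max(scores, key=scores.get)


print(disambiguate("bank", "She opened an account at the bank to deposit money"))
print(disambiguate("bank", "They went fishing on the bank of the river"))
```

The first sentence shares three cue words with the financial sense and the second shares two with the river sense, so each is assigned the intended reading. A realistic system would need proper tokenization and a much larger inventory.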

9- Meaning and morphosyntax I: the semantics of grammatical categories

In the ninth chapter and the chapter that follows, the author tackles some semantic phenomena related to morphosyntax. Analyzing a language grammatically involves analyzing it into a variety of elements and structures such as phonemes, morphemes, and words, and, among the words, into syntactic categories of various types. Among these categories are the parts of speech (also known as lexical or grammatical categories): noun, verb, adjective, determiner, and so on. However, parts of speech systems vary among the languages of the world. In a typical sentence of any language, we can identify constituents which are translated by, and seem to function similarly to, nouns and verbs in English or other familiar languages. Parts of speech can be delimited on the basis of:

Morpho-distributional criteria: A distributional approach classifies parts of speech on the basis of the way they are distributed in sentences. One way of defining these patterns is to advance sample contexts which can serve as test frames.

Semantic criteria: one obvious way of delimiting the categories is based on semantic criteria. In other words, on the basis of commonalities in the meanings of words in any class.

The author moves on to the semantics of morphosyntactic categories such as tense and aspect. On the difficulty of fathoming the notion of time, Saint Augustine (354–430 AD) once said: ‘What then is time? If no one asks me, I know what it is. If I wish to explain it to him who asks, I do not know.’ Tense is generally the name of the class of grammatical markers used to signal the location of situations in time, and one hallmark of the English tense system is the availability of a contrast between the simple past, or preterite, and the present perfect. The perfect is often explained as conveying the continuing relevance of the past action. Another morphosyntactic category is aspect. Different tenses show different locations of the event in time, whereas different aspects show different ways of presenting time within the event itself, as flowing or as stationary. The aspectual system concerns how the internal temporal constituency of an event is viewed: whether the event is viewed from a distance, as a single unanalysable whole, with its beginning, middle and end foreshortened into one, or from close up, so that the distinct stages of the event can be seen individually.

10- Meaning and morphosyntax II: verb meaning and argument structure

In the tenth chapter, the author moves from word level to clause level and the relation between a verb and its participants, often called argument structure. Verbs are the core of the clause and play an important role in the interaction between meaning and syntax. This is because verbs are typically accompanied by nouns which refer to the participants in the event, and these participants receive a range of morphosyntactic markers. The relation between a verb and its participants is, in fact, a problem of the syntax-semantics interface, which arose within generative grammar. Generative grammar imposed a strict division between different components of the grammar (syntax, phonology, semantics and so on), each of which is assumed to have its own explanatory principles and structure. It was assumed that the possible arguments of all verbs could be classified into a few classes, called thematic roles, participant roles, semantic roles or theta roles.

11- Semantic variation and change

In the eleventh chapter, the author talks about semantic variation, synchronic and diachronic, and the resulting change in meaning. The author starts by distinguishing universality and variation of sense from universality and variation of reference. He also discusses the problem of finding an appropriate metalanguage for cross-linguistic or historical semantic study. One of the important characteristics of semantic change is that it crucially involves polysemy: a word does not suddenly change from meaning A to meaning B in a single move. Instead, the change happens via an intermediate stage in which the word has both A and B among its meanings. Early studies of semantic change set up broad categories of change described with general labels like ‘weakening’, ‘strengthening’ and so on. One of these categories is grammaticalization, which can be defined as the process by which open-class content words turn into closed-class function forms by losing elements of their meaning, and by a restriction in their possible grammatical contexts.

The author then moves to semantic typology, which focuses on the question of cross-linguistic regularities in denotation or extension. Semantic typology also attempts to answer questions about the range of variation shown by the world’s languages in words for colours, body parts, and basic actions, and about whether there are any universal or widespread similarities in the boundaries between different words in each of these domains. Interestingly, despite body parts being, per se, universally shared, cross-linguistic investigation reveals that there is vast variety in the extensions of human body-part terms, and different languages divide the colour space in various incompatible ways.

Evaluation

The Victorian historian Thomas Carlyle once said, reflecting on the worthlessness of economics: ‘Not a "gay science", I should say, like some we have heard of; no, a dreary, desolate and, indeed, quite abject and distressing one; what we might call, by way of eminence, the dismal science.’ That view of economics is often shared by learners studying semantics, since it is a blend of logic, philosophy, mathematics, and language. This feeling that semantics is a dismal science may originate from the fact that it can be challenging to see how its concepts fit real-world problems. However, in today’s world, semantics plays a crucial role in many fields such as technology, where researchers depend on it heavily in artificial intelligence, which aspires to simulate human behavior and the unique feature that distinguishes humans from other creatures: language.

Introducing Semantics is a must-read reference for linguistics majors because it provides a sound overview of a science that is widely used in natural language processing, voice recognition, logic and so on. Even though the book is meant to be an introductory textbook, it may not be suitable for those who have just entered the world of linguistics in general and semantics in particular, because some chapters digress in ways that may not be useful for a first-timer. The book is therefore a good read for intermediate majors, junior researchers, graduate students in linguistics, or hardworking undergraduates. The book also assumes that the reader has some background in linguistics, and it would best suit those with a good knowledge of logic and philosophy, which is in itself a shortcoming. The book should have given an adequate introduction to philosophy and logic to make it easier for the reader to follow the concepts and dynamics of semantics it explains. The book is also a good companion for those who want to study semantics by themselves, thanks to the activities provided at the end of each chapter. The author also provides references and further readings for a more in-depth understanding of the concepts tackled in the book. However, I think it would have been more useful had there been systematic answers to the questions, showing how a semanticist would approach them, as it is challenging for many students to put theory into practice. To strengthen the reader’s understanding, the book provides a plethora of examples from different world languages in an attempt to objectify and universalize semantic phenomena found across languages. One perk that the book offers at the end is a glossary of the semantic and linguistic terms mentioned in the book.

Since the author has stated that the present book is designed for undergraduate students, it would not be wise for non-linguistics majors who need or want to study semantics, such as informatics, philosophy and mathematics students, to start with this book. Thus, the present book is exclusively for linguistics majors who are in their second or third year.

The book was published in 2010, so a decade has passed since its publication, which means that some of its data may be outdated given that this field is constantly changing. The book encourages research through questions in the activities that require critical thinking, and it points to questions that are yet to be answered by researchers.

About the reviewer:

Mehdi ZOUAOUI is a lecturer at Istanbul University with an interest in general linguistics, education, E-learning, and global affairs. He’s a frequent article writer on E-learning affairs.

Footnotes

1- Semasiology (from Greek: σημασία, semasia, "signification") is a discipline of linguistics concerned with the question "what does the word X mean?". It studies the meaning of words regardless of how they are pronounced. It is the opposite of onomasiology, a branch of lexicology that starts with a concept or object and asks for its name, i.e., "how do you express X?" whereas semasiology starts with a word and asks for its meanings. "Semasiology - Wikipedia." https://en.wikipedia.org/wiki/Semasiology. Accessed 20 Dec. 2020.

2- The rationale of this requirement is the principle of identity under substitution articulated by the seventeenth-century German philosopher Leibniz: eadem sunt, quae sibi mutuo substitui possunt, salva veritate (Latin for ‘things are the same which can be substituted one for the other with truth intact’).

3- The most comprehensive attempt to model lexical knowledge on a computer is the WordNet project, which has been running since 1985. WordNet is an online lexical database which sets out to represent and organize lexical semantic information in a psychologically realistic form that facilitates maximally efficient digital manipulation.

4- "Generative lexicon - Wikipedia." https://en.wikipedia.org/wiki/Generative_lexicon. Accessed 5 Nov. 2020.

5- "The dismal science - Wikipedia." https://en.wikipedia.org/wiki/The_dismal_science. Accessed 7 Nov. 2020.

Appendix

Figure 01 (Introducing Semantics, Nick Riemer):

Figure 02: Semiotic Triangle (Introducing Semantics, Nick Riemer):

Figure 03: Classification of definition (Introducing Semantics, Nick Riemer)

Figure 04: The square of opposition

Source: Introducing Semantics (Nick Riemer)

Figure 05: Typology of tense-aspect interaction

Source: Introducing Semantics (329)